The landscape of artificial intelligence (AI) risk and regulation is undergoing a significant transformation. In the European Union, this is marked by the EU AI Act, the world’s first comprehensive AI law. In the United States, it is complemented by President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

These developments signal a pivotal shift toward regulatory frameworks that balance innovation with ethical considerations, data protection, and societal safety. Here, we examine the core aspects of both the EU AI Act and the Biden administration’s executive order and highlight their implications for risk, resilience, security, and compliance teams.

The EU AI Act: A New Precedent in AI Regulation

The EU AI Act sets a global benchmark for AI regulation. It addresses the nuanced challenges posed by foundation models while seeking to avoid stifling innovation. With the Act moving through the final steps of adoption early this year, it establishes a framework in which AI systems are categorized and regulated according to their risk level, from minimal and limited risk to high risk. Systems that pose a clear threat to fundamental rights are prohibited outright.

The EU AI Act applies to a wide range of organizations involved in developing, deploying, importing, distributing, or manufacturing AI systems. This includes organizations based outside the EU when their AI systems are placed on the EU market or their outputs are used in the EU.

Key sectors and applications impacted by the Act include insurance, banking, private equity, technology and telecommunications, healthcare, education, public services, and media. These sectors must prepare for strict regulatory requirements, including inventorying AI models, classifying them by risk, and adhering to compliance measures for high-risk systems.

Key Highlights of the EU AI Act:

The Act adopts the OECD’s internationally recognized definition of an AI system, providing a common understanding of which technologies fall within its scope.

Regulatory sandboxes and real-world testing environments will support the development of innovative AI within a safe and supportive framework.

New administrative structures, including an AI Office and a scientific panel, will oversee the most advanced AI models, support the development of standards, and coordinate enforcement across the EU.

Depending on the violation, organizations could face fines ranging from €7.5 million or 1.5% of global annual turnover up to €35 million or 7% of global annual turnover. The Act is expected to be formally adopted early this year. It would become fully applicable two years after entering into force, and certain provisions, such as the prohibitions, would take effect sooner.
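The fine range follows a simple rule in the Act. For most organizations, the maximum penalty in a tier is either the fixed amount or the percentage of global annual turnover, whichever is higher. For SMEs and startups, the lower of the two applies. The sketch below is an illustration under stated assumptions. It encodes only the two endpoint tiers quoted above, using the percentages as stated in this article. The turnover figure is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """A penalty tier: a fixed cap in euros and a share of global annual turnover."""
    fixed_cap_eur: float
    turnover_share: float


# Endpoints of the range quoted above; intermediate tiers are omitted.
LOWEST_TIER = FineTier(fixed_cap_eur=7_500_000, turnover_share=0.015)
HIGHEST_TIER = FineTier(fixed_cap_eur=35_000_000, turnover_share=0.07)


def max_fine_eur(tier: FineTier, global_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of a fine in a tier.

    Large organizations: the higher of the fixed cap and the turnover-based amount.
    SMEs and startups: the lower of the two.
    """
    turnover_based = tier.turnover_share * global_turnover_eur
    if is_sme:
        return min(tier.fixed_cap_eur, turnover_based)
    return max(tier.fixed_cap_eur, turnover_based)


# Hypothetical company with €2 billion in global annual turnover.
print(f"{max_fine_eur(HIGHEST_TIER, 2_000_000_000):,.0f}")  # → 140,000,000 (7% of €2bn exceeds €35m)
print(f"{max_fine_eur(LOWEST_TIER, 2_000_000_000):,.0f}")   # → 30,000,000 (1.5% of €2bn exceeds €7.5m)
```

For a large company, the percentage usually dominates. For a smaller one, the fixed amount sets the ceiling.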

The regulation concentrates its strictest requirements on the risks that pose the greatest threat:

- Risk-Based Approach: AI systems will be classified based on their risk to individuals and society, with stringent obligations for high-risk systems, including mandatory impact assessments, data governance, and transparency requirements.

- Regulation of General Purpose AI (GPAI) Systems: Special provisions for general-purpose AI systems aim to ensure transparency and mitigate systemic risks, requiring detailed documentation and adherence to specific obligations.

- Bans on Certain AI Systems: The Act prohibits AI systems that compromise fundamental rights, such as biometric categorization based on sensitive characteristics and untargeted scraping for facial recognition databases.

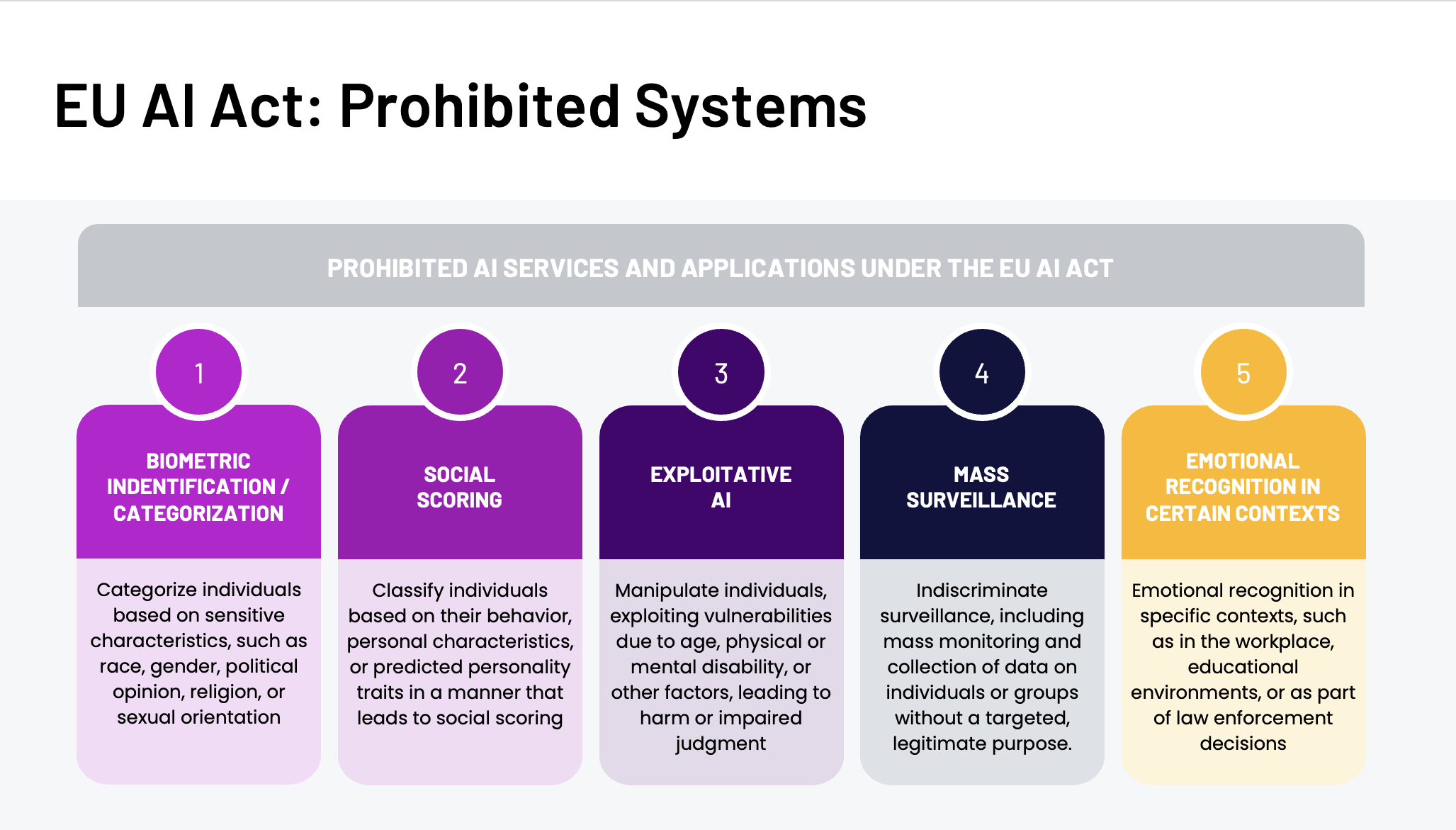

The EU AI Act introduces specific prohibitions on the use of AI in certain types of services and applications. These prohibitions protect individuals from the invasive or manipulative capabilities of AI technologies.

The types of AI services and applications that are explicitly prohibited under the Act include:

Biometric Identification

The use of AI for real-time remote biometric identification in publicly accessible spaces for law enforcement purposes is severely restricted. Narrow exceptions apply under strict conditions (e.g., preventing serious crime). The Act also bans biometric categorization systems that use people’s biometric data to infer sensitive characteristics such as race, political opinions, trade union membership, religious or philosophical beliefs, or sexual orientation, given the risk of discrimination and harm.

Social Scoring

The AI Act prohibits AI systems that evaluate or classify individuals based on their behavior, personal characteristics, or predicted personality traits in a manner that leads to social scoring. This includes systems that could result in unjust or discriminatory treatment of individuals or groups, affecting their access to services or opportunities.

Exploitative AI

AI applications designed to manipulate individuals, exploiting vulnerabilities due to age, physical or mental disability, or other factors, leading to harm or impaired judgment, are banned. This aims to protect consumers and citizens from AI that could coerce, deceive, or otherwise harm individuals.

Mass Surveillance

The Act prohibits AI systems used for indiscriminate surveillance, including mass monitoring and collection of data on individuals or groups without a targeted, legitimate purpose.

Emotion Recognition and Biometric Categorization in Certain Contexts

The use of AI for emotion recognition or biometric categorization in specific contexts, such as in the workplace, educational environments, or as part of law enforcement decisions, is banned when it infringes on individuals’ rights or leads to discrimination.

Teams must ensure that none of their services use AI in any of the ways prohibited by regulatory frameworks such as the EU AI Act.
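One way to put this into practice is a pre-deployment screen. It checks each inventoried system's declared characteristics against the prohibited categories described above. The sketch below is a hypothetical checklist, not legal advice. The `AISystemProfile` fields and their mapping to categories are simplified assumptions. The Act's exceptions, such as the narrow law-enforcement exceptions for real-time biometric identification, are deliberately not modeled. A flag therefore means "escalate to legal review", not "prohibited".

```python
from dataclasses import dataclass


@dataclass
class AISystemProfile:
    """Hypothetical self-declared attributes for one AI system in the inventory."""
    name: str
    realtime_remote_biometric_id_in_public: bool = False
    infers_sensitive_traits_from_biometrics: bool = False
    social_scoring: bool = False
    exploits_vulnerabilities: bool = False
    untargeted_facial_scraping: bool = False
    emotion_recognition_at_work_or_school: bool = False


# Maps each hypothetical attribute to the prohibited category it relates to.
PROHIBITED_CHECKS = {
    "realtime_remote_biometric_id_in_public": "Biometric identification",
    "infers_sensitive_traits_from_biometrics": "Biometric categorization",
    "social_scoring": "Social scoring",
    "exploits_vulnerabilities": "Exploitative AI",
    "untargeted_facial_scraping": "Mass surveillance / untargeted scraping",
    "emotion_recognition_at_work_or_school": "Emotion recognition",
}


def screen(profile: AISystemProfile) -> list[str]:
    """Return the prohibited categories this system may fall into (empty if none)."""
    return [category for flag, category in PROHIBITED_CHECKS.items() if getattr(profile, flag)]


def deployment_gate(profiles: list[AISystemProfile]) -> dict[str, list[str]]:
    """Map each flagged system's name to its matching categories for legal review."""
    return {p.name: hits for p in profiles if (hits := screen(p))}


inventory = [
    AISystemProfile("support-chatbot"),
    AISystemProfile("hr-sentiment-monitor", emotion_recognition_at_work_or_school=True),
]
print(deployment_gate(inventory))  # → {'hr-sentiment-monitor': ['Emotion recognition']}
```

Keeping the checks in a single table makes it easy to update them as guidance on the prohibitions evolves.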

2023 US Executive Order on AI: Fostering Innovation and Ethical Governance

Parallel to the EU’s legislative efforts, President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence underscores the United States’ commitment to leading AI innovation while ensuring responsible development and use.

It focuses on research, development, civil rights, privacy protection, and the establishment of international AI standards.

Key Highlights of the 2023 Executive Order on Artificial Intelligence:

- Enhanced Inter-Agency Collaboration: The order mandates a cooperative approach among U.S. government agencies, the private sector, and academia, highlighting the necessity for shared guidelines and standards.

- Privacy and Security Prioritization: Emphasis on AI security and privacy necessitates robust measures to protect data and ensure ethical use.

- Ethical AI Development: The directive underscores the importance of developing AI in an ethical, accountable, and transparent manner, aligning with global standards.

AI Risk and Compliance: Deliver for Today, Design for Tomorrow

As the landscape of AI regulation evolves with the EU AI Act and related global policies, risk, resilience, security, and compliance teams have a crucial role in preparing their organizations for the changes ahead.

Here are five practical steps teams can take to deliver what’s required today while designing for a horizon still taking shape:

1. Conduct a Comprehensive AI Audit

Inventory AI Systems: Start by cataloging all AI applications and systems in use across the organization to understand the scope of impact. This includes identifying which systems might fall under the EU AI Act’s definition of “high-risk” AI or within the scope of Biden’s executive order.

Risk Assessment: Perform risk assessments to evaluate how AI systems could affect individuals’ rights, safety, and the environment. This will help classify AI systems according to the risk-based approach outlined in the EU AI Act.
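The inventory and risk assessment can start as a simple structured register. The sketch below records each system with a hypothetical `use_area` field and assigns a provisional tier using the Act's four-level structure: unacceptable, high, limited, and minimal risk. The mapping from use areas to tiers is an illustrative assumption. It is loosely based on the kinds of uses the Act treats as high-risk, such as employment, education, creditworthiness assessment, and life and health insurance pricing. A real classification requires legal review of the final text and its annexes.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Illustrative mapping only; not a substitute for reading the Act's annexes.
HIGH_RISK_AREAS = {"employment", "education", "credit_scoring", "life_health_insurance_pricing"}
TRANSPARENCY_AREAS = {"customer_chatbot", "synthetic_media"}  # assumed limited-risk uses


@dataclass
class InventoryEntry:
    name: str
    owner: str
    use_area: str
    prohibited_flags: list[str] = field(default_factory=list)  # output of a prohibited-use screen


def provisional_tier(entry: InventoryEntry) -> RiskTier:
    """Assign a provisional tier; the most severe matching rule wins."""
    if entry.prohibited_flags:
        return RiskTier.UNACCEPTABLE
    if entry.use_area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if entry.use_area in TRANSPARENCY_AREAS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


register = [
    InventoryEntry("cv-screener", "HR", "employment"),
    InventoryEntry("support-bot", "Customer Service", "customer_chatbot"),
    InventoryEntry("spam-filter", "IT", "email_filtering"),
]

# List the most severe systems first so compliance work can be prioritized.
for entry in sorted(register, key=provisional_tier, reverse=True):
    print(f"{entry.name}: {provisional_tier(entry).name}")
# → cv-screener: HIGH
# → support-bot: LIMITED
# → spam-filter: MINIMAL
```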

2. Enhance AI Governance Frameworks

Update Policies and Procedures: Revise existing governance frameworks to include AI-specific considerations, ensuring they align with the new regulations. This may involve developing new policies on data governance, transparency, human oversight, and ethical AI use.

Establish AI Ethics Guidelines: Create or refine AI ethics guidelines that comply with both the EU AI Act and principles highlighted in Biden’s executive order, emphasizing fairness, accountability, and transparency.

3. Implement Data Governance and Security Measures

Data Protection: Ensure robust data governance practices are in place, especially for AI systems that process personal data, aligning with GDPR requirements and the data governance requirements of the EU AI Act.

Security Protocols: Strengthen cybersecurity measures around AI systems, focusing on protecting data integrity, ensuring system robustness, and safeguarding against unauthorized access.
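As one concrete integrity control, teams can record a cryptographic hash of each approved model artifact. They can then refuse to load any file whose hash no longer matches. The sketch uses Python's standard `hashlib` and `hmac.compare_digest`. The file name and the approved-hash step are illustrative assumptions. A real deployment would also need to protect the stored hashes themselves, for example with signing and access controls.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, approved_sha256: str) -> bool:
    """Return True only if the file still matches the hash recorded at approval time."""
    return hmac.compare_digest(sha256_of(path), approved_sha256)


# Demo with a throwaway file standing in for a model artifact.
with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp) / "model.bin"  # hypothetical artifact name
    model.write_bytes(b"approved weights")
    approved = sha256_of(model)      # recorded when the model is approved
    ok_before = verify_artifact(model, approved)
    model.write_bytes(b"tampered weights")
    ok_after = verify_artifact(model, approved)

print(ok_before, ok_after)  # → True False
```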

4. Plan for Human Oversight

Integrate Human Oversight: Develop mechanisms for human oversight in AI decision-making processes, particularly for high-risk AI applications. This includes establishing clear procedures for human intervention and reviewing AI-generated decisions.
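A common way to build in this oversight is a routing rule that sends some AI decisions to a human reviewer before they take effect. In the sketch below, any adverse decision from a high-risk system is queued for review. So is any low-confidence decision from a high-risk system. The 0.9 threshold, the field names, and the rule itself are illustrative assumptions. The Act requires effective human oversight of high-risk systems but does not prescribe this particular mechanism.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    outcome: str           # e.g. "approve" or "decline"
    confidence: float      # model confidence score in [0, 1]
    high_risk_system: bool


REVIEW_THRESHOLD = 0.9  # hypothetical; set per system and document the rationale


def needs_human_review(d: Decision) -> bool:
    """Escalate adverse or low-confidence decisions from high-risk systems."""
    if not d.high_risk_system:
        return False
    return d.outcome == "decline" or d.confidence < REVIEW_THRESHOLD


def route(decisions: list[Decision], review_queue: deque) -> list[str]:
    """Apply decisions that need no review; queue the rest for a human reviewer."""
    applied = []
    for d in decisions:
        if needs_human_review(d):
            review_queue.append(d)
        else:
            applied.append(d.case_id)
    return applied


queue: deque = deque()
batch = [
    Decision("A1", "approve", 0.97, True),
    Decision("A2", "decline", 0.99, True),
    Decision("A3", "approve", 0.62, True),
    Decision("B1", "decline", 0.55, False),
]
auto = route(batch, queue)
print(auto)                        # → ['A1', 'B1']
print([d.case_id for d in queue])  # → ['A2', 'A3']
```

Keeping the rule in one small function makes it easy to document, test, and audit. Each of these matters when demonstrating oversight to a regulator.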

Training and Awareness: Provide training for staff involved in AI development and deployment to understand their roles in monitoring AI systems and ensuring compliance with ethical standards and regulations.

5. Foster Transparency and Accountability

Documentation and Reporting: Maintain comprehensive documentation for AI systems, including development processes, data sources, and decision-making frameworks. This is essential for demonstrating compliance with the EU AI Act’s transparency requirements.
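Documentation is easier to keep current when it is captured as structured, versioned records rather than free-form documents. The sketch below defines a hypothetical record that covers the items listed above: development process, data sources, and decision-making logic. It adds ownership and oversight details and serializes the record to JSON for an audit trail. The field set is an assumption for illustration, not the Act's required technical documentation template.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class AISystemRecord:
    """Hypothetical documentation record for one AI system."""
    system_name: str
    version: str
    owner: str
    intended_purpose: str
    risk_tier: str
    data_sources: list[str] = field(default_factory=list)
    development_notes: str = ""
    decision_logic_summary: str = ""
    human_oversight: str = ""
    last_reviewed: str = field(default_factory=lambda: date.today().isoformat())

    def missing_fields(self) -> list[str]:
        """List documentation fields that are still empty."""
        return [name for name, value in asdict(self).items() if value in ("", [])]


record = AISystemRecord(
    system_name="cv-screener",
    version="1.4.0",
    owner="HR Analytics",
    intended_purpose="Rank job applications for recruiter review",
    risk_tier="HIGH",
    data_sources=["historical applications 2019-2023"],
    decision_logic_summary="Ranks applications by match to role requirements",
)
print(record.missing_fields())  # → ['development_notes', 'human_oversight']
print(json.dumps(asdict(record), indent=2))
```

Reporting gaps such as `missing_fields()` as part of a regular review cycle helps keep documentation from drifting out of date.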

Stakeholder Engagement: Communicate openly with stakeholders about AI use, including customers, employees, and regulators. This involves explaining the purpose of AI systems, their benefits, and how risks are being managed.

Leveraging Microsimulations for AI Risk Management

Microsimulations are interactive, bite-sized simulations that model the behavior and interactions of people in a complex system. These 15-30 minute exercises can play a pivotal role in enhancing the risk management strategies of risk, resilience, security, and compliance teams, particularly when safeguarding the use of AI in critical business services.

Here’s how microsimulations can be instrumental in navigating the challenges and ensuring the responsible use of AI technologies:

Understanding Complex AI Interactions

- System Behavior Analysis: Microsimulations can model how AI systems interact within the broader operational context of a business. By simulating the behavior of AI-driven processes and their interactions with people and other systems, organizations can better understand potential risks and vulnerabilities.

- Impact Analysis: Microsimulations enable the assessment of AI systems’ impacts on critical business services across various scenarios, including stress conditions and threat landscapes.

Enhancing Decision-making and Policy Development

- Policy Testing: Before implementing AI governance policies or deploying AI systems in critical areas, organizations can use microsimulations to test the effectiveness of those policies in a controlled, virtual environment. This can reveal which policies are most effective at managing the risks associated with AI applications and guide more informed decision-making.

- Scenario Planning: Microsimulations enable organizations to explore various “what-if” scenarios, assessing how AI systems might respond to changes in the operational environment, regulatory updates, or emerging threats. This aids in developing flexible strategies that can adapt to future challenges.
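Facilitated microsimulations are exercises for people. The underlying idea of testing a policy before deploying it can also be illustrated computationally. The sketch below is a simple Monte Carlo simulation, a different technique from the exercises described here. It compares two hypothetical human-review thresholds on a synthetic stream of AI decisions. For each policy, it counts how many erroneous decisions are caught and how many cases reviewers must handle. The error model and all numbers are invented for illustration and are not drawn from real data. The model assumes that lower-confidence decisions are more likely to be wrong.

```python
import random


def simulate_policy(threshold: float, n_decisions: int = 10_000, seed: int = 42) -> dict[str, float]:
    """Simulate one review policy on synthetic decisions.

    Toy assumptions: confidence is uniform in [0.5, 1.0], a decision is wrong with
    probability (1 - confidence), decisions below `threshold` go to a human, and
    the human catches every error they review.
    """
    rng = random.Random(seed)  # same seed, so every policy sees the same decision stream
    reviewed = caught = missed = 0
    for _ in range(n_decisions):
        confidence = rng.uniform(0.5, 1.0)
        is_error = rng.random() < (1.0 - confidence)
        if confidence < threshold:
            reviewed += 1
            caught += is_error
        else:
            missed += is_error
    return {
        "review_rate": reviewed / n_decisions,
        "errors_caught": caught,
        "errors_missed": missed,
    }


for threshold in (0.7, 0.9):
    print(threshold, simulate_policy(threshold))
```

Both policies run on the same synthetic stream, so the comparison isolates the effect of the threshold. The stricter policy misses fewer errors but sends more cases to reviewers, which is the kind of trade-off a "what-if" exercise is meant to surface.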

Improving AI System Design and Performance

- Design Optimization: By simulating the performance of AI systems under a range of conditions, developers can identify optimizations to improve reliability, efficiency, and safety before full-scale implementation. This iterative testing and refinement process is crucial for AI systems that support critical business functions.

- Validation of Safety and Reliability: Microsimulations provide a framework for validating the safety and reliability of AI systems, demonstrating that they perform as intended and adhere to ethical and regulatory standards. This validation is essential for building trust among stakeholders and ensuring compliance.

Facilitating Training and Preparedness

- Training Programs: Microsimulations bring compliance training to life, helping participants understand the potential impacts of AI decisions and how to intervene effectively. This supports the human-in-the-loop approach, ensuring that teams are prepared to oversee AI systems responsibly.

- Disaster Preparedness: By simulating crises in which AI systems fail or are compromised, organizations can better prepare their response strategies. This enhances overall resilience, ensuring that critical services can be maintained even when the unexpected happens.

Supporting Regulatory Compliance and Ethical Considerations

- Compliance Checks: Organizations can use microsimulations to ensure that AI applications comply with regulatory requirements, including those outlined in the EU AI Act. Simulating the operation of AI systems under the specific constraints of the law helps identify any areas of non-compliance.

- Ethical Analysis: Microsimulations can also be used to explore the ethical implications of AI systems, ensuring that their deployment aligns with organizational values and societal norms. This is particularly important for AI applications that have significant impacts on individuals’ rights and privacy.

Soaring into a More Intelligent Future

As teams navigate evolving regulatory frameworks such as the EU AI Act, along with the ethical considerations inherent in AI deployment, microsimulations can be a powerful tool for discovery, planning, implementation, training, and testing.

By adopting an iterative test-respond-learn approach as part of a comprehensive AI governance and risk management strategy, we pave the way for a future where AI is deployed safely, ethically, and effectively within the business landscape.

To learn more about how iluminr can help you navigate new terrain shaped by the rapid pace of AI evolution and regulatory compliance, take an AI Microsimulation back to your team, absolutely free. Check out this and many other resources in our Resilience Leader’s AI Toolkit.

Author

Paula Fontana

VP, Global Marketing

iluminr