Gamechangers in Resilience is a series spotlighting the people rethinking how we prepare for disruption – not just with better tools, but with bolder questions.

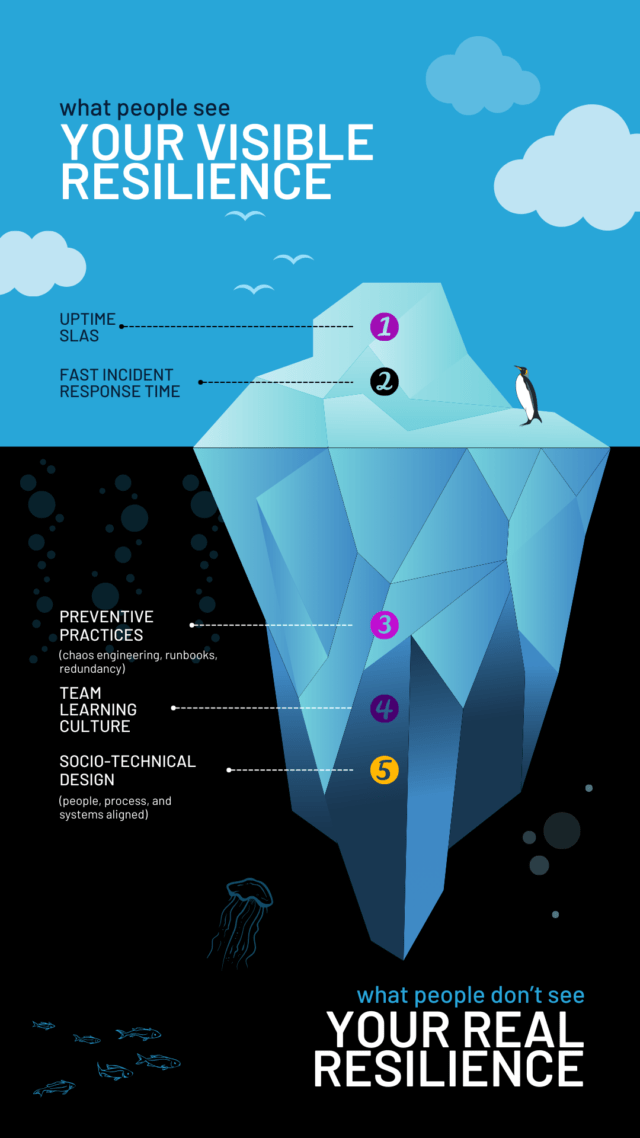

In the world of technical resilience, most focus on uptime.

Adrian Hornsby focuses on what’s beneath it.

After a decade at AWS shaping how global teams approach chaos engineering and incident response, Adrian quietly stepped back – not to disconnect, but to redesign how he shows up. Now running his own consultancy, Resilium Labs, he helps organizations do what most don’t think to measure: build systems – and cultures – that bend without breaking.

In this conversation, Adrian unpacks what resilience really means, why some of the most powerful practices are invisible, and how the best teams don’t just bounce back – they get smarter, safer, and stronger over time.

Q: What’s the most resilient system you’ve encountered outside of tech? What made it resilient?

Adrian: Outside of tech? Probably the human body. Since I live in Finland, I’ll use a very Finnish analogy.

As you probably already know, here in Finland, we love saunas. But we also love what might seem like an insane practice to outsiders. We sit in 80-100°C saunas until we’re practically cooking, then run outside to plunge into ice-cold lakes. We actually cut holes in frozen lakes just for this purpose. And I love it. 🙂

What’s interesting isn’t just that almost everybody can survive this temperature shock, but that our bodies actually get used to it and even become stronger from it. The first time you try avanto (ice swimming), it’s brutal. You can barely stand 10 seconds. The shock is just so intense. But with regular exposure, something magical happens. Your body adapts. Your circulation improves. Your cold tolerance builds. You literally become more resilient through controlled exposure to stress. This is remarkably similar to how chaos engineering works in systems. By deliberately exposing our systems to controlled failures, we help them adapt and become more resilient. What makes both systems resilient isn’t avoiding stress; it’s gradually adapting to it.

The best part? This kind of practice doesn’t just help you handle the specific stress you trained for. It generalizes and creates a general hardiness that helps you handle all kinds of unexpected challenges. That’s real resilience.

Q: What do most architecture reviews fail to review?

Adrian: They typically fail to review the “space between” components, the emergent behaviors that come from interactions rather than from individual components.

They also typically fail to understand how the system degrades under partial failures, how the recovery mechanisms and restart behaviors work, state management during transitions, how operators will actually interact with the system, and the implicit assumptions each component makes about others.

Unfortunately, architecture reviews rarely challenge the assumption that components will behave exactly as designed. They don’t examine what happens when services exhibit “gray failures”, when they are not completely failing but are behaving erratically, e.g., with high latency. Finally, they often fail to review whether humans can actually understand the system well enough to operate it effectively, especially during incidents.

The biggest problem occurs when these reviews go unchallenged, that is, when their conclusions are never verified through chaos experiments. Without verification, they remain pure assumptions and speculation. Another big problem with architecture reviews is that they are stuck in time. By the time they are completed, the architecture has most likely changed and evolved, which means the review is already outdated. Again, that’s why you want to experiment with your systems continuously; it is the best way to understand how they work now.
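To make the idea of verifying a review through experiments a little more concrete, here is a minimal sketch of a chaos experiment that injects a gray failure (extra latency) and checks a steady-state hypothesis. It is an illustration only, not something described in the interview: `Dependency`, `fetch_with_fallback`, `run_experiment`, and all thresholds are hypothetical names and values, and latency is simulated rather than measured.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Dependency:
    """Hypothetical downstream service whose latency we can manipulate."""
    latency_ms: float = 20.0

    def call(self) -> tuple[str, float]:
        # Returns the payload and the (simulated) time it took to produce it.
        return "fresh", self.latency_ms


def fetch_with_fallback(dep: Dependency, timeout_ms: float = 100.0,
                        cached: str = "stale") -> tuple[str, float]:
    """Serve a cached value if the dependency is slower than the timeout."""
    value, latency = dep.call()
    if latency > timeout_ms:
        return cached, timeout_ms  # the caller gave up at the timeout
    return value, latency


def run_experiment(dep: Dependency, injected_latency_ms: float,
                   max_user_latency_ms: float = 150.0) -> dict:
    """Hypothesis: users still get an answer within max_user_latency_ms."""
    baseline = fetch_with_fallback(dep)
    original = dep.latency_ms
    dep.latency_ms = original + injected_latency_ms  # inject the fault
    try:
        during_fault = fetch_with_fallback(dep)
    finally:
        dep.latency_ms = original  # always roll the fault back
    return {
        "baseline": baseline,
        "during_fault": during_fault,
        "hypothesis_holds": during_fault[1] <= max_user_latency_ms,
    }


result = run_experiment(Dependency(), injected_latency_ms=500.0)
```

In this toy setup the hypothesis holds because the fallback kicks in at the timeout. The interesting experiments are the ones where it does not, since those expose an assumption the review took for granted.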

Finally, architecture reviews often forget to consider the operational consequences of the design decisions.

Q: Can you share a time when removing a capability increased resilience?

Adrian: I’ve seen a case where removing an automated failover capability actually improved overall system resilience.

The team had built an elaborate automated failover system for a database cluster that detected issues and automatically transitioned to a standby node. In theory, this reduced downtime. In practice, however, it created cascading failures when the automated system made incorrect decisions based on incomplete information. The failover would sometimes trigger during network blips, causing unnecessary disruptions.

Worse, it occasionally failed halfway through, leaving the system in an indeterminate state that required lengthy manual intervention and recovery. The team eventually got fed up with it and replaced it with something way simpler. As a result, the team got fewer false positives triggering unnecessary failovers, made more deliberate, informed decisions about when to fail over, and gained a clearer understanding of the system state during transitions. Ultimately, it improved availability.

Complexity is often the enemy of resilience, which presents a challenge because achieving simplicity is actually hard work!

As engineers, we naturally gravitate toward over-complicating things – it’s our default mode of operation. It takes deliberate effort to resist the urge to over-engineer.

Q: How do you know when a team is actually resilient, not just their infrastructure?

Adrian: That’s such a great question. I would first say that resilience isn’t just about preventing every possible failure. It’s about how you respond when things inevitably go wrong. That means how well your team can withstand, recover, adapt, and eventually learn. So, first, genuinely resilient teams need to understand this fundamental principle.

But in general, the first sign that a team is improving its resilience is that it has moved beyond the “100% availability trap” mentality. That trap is the (still widespread) fallacy that IT systems can be available 100% of the time. Failure will happen regardless of how much money you invest, so resilient teams spend more time working to reduce recovery time than trying to prevent failures.
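A quick back-of-the-envelope calculation shows why recovery time is such a strong lever. The sketch below uses the standard steady-state approximation, availability = MTBF / (MTBF + MTTR). The numbers are hypothetical and chosen only for illustration; they do not come from the interview.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability from mean time between failures and mean time to recover."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical baseline: roughly one failure a month, four hours to recover.
baseline = availability(mtbf_hours=720, mttr_hours=4)

# Option A: halve the failure rate (prevention), same recovery time.
fewer_failures = availability(mtbf_hours=1440, mttr_hours=4)

# Option B: same failure rate, but recover in one hour instead of four.
faster_recovery = availability(mtbf_hours=720, mttr_hours=1)

for label, value in [("baseline", baseline),
                     ("fewer failures", fewer_failures),
                     ("faster recovery", faster_recovery)]:
    print(f"{label:>16}: {value:.2%}")
```

Under these assumed numbers, cutting recovery time from four hours to one yields about 99.86% availability, compared with about 99.72% for halving the failure rate and about 99.45% for the baseline. The exact trade-off depends on the system, but the shape of the formula explains why investing in recovery pays off.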

What is interesting is that when failure eventually happens, resilient teams become stronger. They turn it into a learning opportunity, not a blaming exercise. They respond to an incident with curiosity rather than blame. Their first reaction is often “that’s interesting” rather than “who broke it?”. It is actually beautiful to see that in action. Resilient teams also work closely with business stakeholders to determine response priorities rather than making these decisions in isolation. Much of being resilient involves being great at collaborating across different organizations.

Resilient teams understand that people, processes, and technology must work together. Incidents cannot be handled at the technical level alone. That’s one of the most misunderstood principles of resilience: you need to consider the whole socio-technical system, not just the technical components.

Resilient teams practice failure scenarios regularly to develop the muscle memory needed for real incidents. Ideally, you want to make surprises “boring.” That’s where GameDays and chaos engineering come into play.

Truly resilient teams have found a balance between operational efficiency and maintaining the slack resources needed for resilience. Efficiency-obsession and resilience are antagonists. Resilient team members, especially new ones, feel psychologically safe asking questions and challenging assumptions. You will also find that in resilient teams, knowledge isn’t concentrated in a few “hero” engineers. Everyone is on the same bus. They communicate well during an incident, making sure that the context is well understood and explained. I am sure I could come up with a lot more, but that’s the gist of it.

Q: You’ve said resilience is cultural. What’s one taboo or “unspoken rule” you’ve had to help a team unlearn to make room for resilience?

Adrian: One thing I have had to help many teams unlearn and overcome is the idea that admitting uncertainty is a sign of incompetence. That is actually a big issue in many organizations. Saying “I don’t know” is hard, really hard, especially for engineers, because the world often expects us to know it all. You are paid well for that. This becomes particularly dangerous during incidents, where people pretend to understand what’s happening rather than acknowledge that they don’t. It prevents the kind of open, collaborative problem-solving that complex failures require.

What you want are teams that actually celebrate uncertainty. You want to be praising engineers when they say, “I’m not sure, let me check that.” You want to encourage teams to imagine how their plans might fail and then verify it with chaos engineering experiments.

And you want to reframe expertise as the ability to be comfortable with uncertainty rather than eliminate it.

Organizations need to realize that resilience doesn’t come from pretending to know everything, but from being prepared to learn and adapt when faced with what you don’t know. And doing that continuously. Every single day.

Q: What’s your favorite uncomfortable question to ask in a postmortem?

Adrian: “What were we afraid to talk about before this problem happened?”

This question addresses the underlying organizational and cultural issues that often precede failures. It shows the knowledge gaps, concerns, and tensions that teams avoid addressing until they become an outage. It’s uncomfortable because it exposes not just technical shortcomings but social ones, too: the way teams communicate (or don’t), how power dynamics silence certain perspectives (the loud voice in the room problem), and how organizational incentives might discourage surfacing potential problems.

The responses often reveal that many people had sensed something was wrong before the incident, but didn’t feel empowered to address it.

Someone might say, “I was worried about our database capacity to scale but didn’t want to be the one putting the launch at risk,” or “I noticed inconsistent monitoring alerts but assumed someone more experienced had already checked.”

The question is powerful because it shifts the conversation from the specifics of the incident to the broader environment that made it possible. It helps teams understand that resilience isn’t just about technical systems but about creating contexts where concerns can be safely raised and addressed before they become incidents.

Q: Which framework do you think needs to be retired or radically rethought?

Adrian: The traditional “root cause analysis” and “5 whys” frameworks need rethinking. They’re based on outdated, linear thinking about failures having single, identifiable causes that can be eliminated. Failures in complex systems never have one single root cause. Instead, they have multiple contributing factors that combine to create failures. These systems are non-deterministic, so repeating the same actions can lead to a different outcome. That is because systems operate in completely dynamic environments where conditions and context continuously change.

On top of that, the language of “root cause” or “5 whys” is problematic in itself. It suggests that a single underlying cause is waiting to be discovered if we dig deep enough, and that you can get to it with 5 Whys. This often leads organizations to implement narrow fixes that don’t address the systemic nature of failures. I used to think that it was just a naming problem and that organizations would not fall into the trap. I no longer think that: having reviewed hundreds of incident postmortems, I have seen that it is a real problem. Not everyone is an expert in incidents, so many will follow the naming and not dig deeper or bring in the context in which the incident happened.

A more useful approach is to focus on understanding how normal work sometimes produces unexpected outcomes. Instead of asking “why did this go wrong?”, we should ask “how did this make sense to everyone involved at the time?” and “what pressures and constraints shaped the environment in which decisions were made?” This shift from finding fault to understanding context is probably the most important for learning from incidents. The goal isn’t to identify THE cause, but to understand the complex interplay of factors that created conditions where failure became possible.

Since it isn’t obvious, let me give you an example I worked on recently. The company had experienced a 2-hour database outage that affected customer access to their accounts, and I was asked to participate in their postmortem. Here’s how the two approaches played out.

At first, they did the five whys. It went something like this:

Why 1: Why did the database go down?

- Because it ran out of storage space.

Why 2: Why did it run out of storage space?

- Because the log files grew too large.

Why 3: Why did the log files grow too large?

- Because log rotation wasn’t functioning properly.

Why 4: Why wasn’t log rotation functioning properly?

- Because the engineer who set it up used incorrect settings.

Why 5: Why did the engineer use incorrect settings?

- Because they weren’t properly trained in database configuration.

Conclusion: We need better training for engineers on database configuration.

We then did the “How” approach. It went something like this:

Facilitator: How did you first become aware of the issue?

- Ops Engineer: I noticed alerts showing unusual disk usage patterns an hour before the crash, but they weren’t critical alerts, so I was finishing another urgent task first.

Facilitator: How did the system appear to be functioning at that time?

- Ops Engineer: It seemed normal except for the disk usage. We’ve had similar warnings before that resolved themselves, so I wasn’t immediately concerned.

Facilitator: What were you focusing on when making decisions about priorities?

- Ops Engineer: I was trying to balance multiple alerts. Since we typically prioritize customer-facing issues, I was trying to fix the payment processing issue first.

Facilitator: How was the log rotation system originally set up?

- Database Engineer: It was configured during our migration six months ago. We copied settings from our test environment, which had different usage patterns. The rotation was set for weekly rather than daily because test data volumes were much smaller.

Facilitator: How do changes to these systems typically get reviewed?

- Team Lead: We usually have a checklist for infrastructure changes, but during the migration period, we moved quickly to meet deadlines, and some review steps were abbreviated.

Notice the difference? The 5 Whys approach created a linear story ending with “insufficient training”. It implicitly blamed the engineer and missed systemic issues like alert prioritization, workload management, and review processes. Eventually, it led to a narrow solution (more training).

The ‘How’ approach identified that competing priorities impacted response times, and that the alert system failed to differentiate between routine and critical issues. Furthermore, migration pressure had led to shortened safety checks, test environments inadequately reflected production loads, and the team had normalized warning signs. In response, they implemented several improvements: revising alert classification, establishing dedicated maintenance periods, enhancing the infrastructure change review process, creating more accurate test environments, and addressing workload prioritization issues.
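As one illustration of what “revising alert classification” could look like for a disk-usage alert like the one in this example, here is a minimal sketch that ranks the alert by projected time until the disk is full rather than by current usage alone. This is an assumption about one possible approach, not what the team actually implemented; `DiskSample`, `hours_until_full`, `classify`, and the four-hour threshold are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DiskSample:
    hours_ago: float  # how long ago the sample was taken
    used_pct: float   # disk usage at that time, 0-100


def hours_until_full(older: DiskSample, newer: DiskSample) -> float | None:
    """Linear projection from two samples; None if usage is flat or falling."""
    elapsed = older.hours_ago - newer.hours_ago
    if elapsed <= 0:
        raise ValueError("older sample must precede newer sample")
    growth_per_hour = (newer.used_pct - older.used_pct) / elapsed
    if growth_per_hour <= 0:
        return None
    return (100.0 - newer.used_pct) / growth_per_hour


def classify(older: DiskSample, newer: DiskSample,
             critical_within_hours: float = 4.0) -> str:
    """Label a disk alert by urgency instead of by raw usage."""
    remaining = hours_until_full(older, newer)
    if remaining is None:
        return "routine"
    return "critical" if remaining <= critical_within_hours else "warning"


# Usage grew from 80% to 90% in two hours: projected full in about two hours.
fast_growth = classify(DiskSample(2, 80.0), DiskSample(0, 90.0))

# Usage grew from 70% to 72% in a day: plenty of time to act.
slow_growth = classify(DiskSample(24, 70.0), DiskSample(0, 72.0))
```

With these inputs, `fast_growth` is `"critical"` and `slow_growth` is `"warning"`. A linear projection is crude, but even this much would separate a warning that tends to resolve itself from one that is an hour or two away from an outage.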

The key difference isn’t just in asking better questions. The ‘How’ approach fundamentally recognizes that incidents emerge from complex interactions between people, technology, and organizational factors, rather than from a single cause or person’s mistake.

Q: Have you ever built or followed a playbook that made things worse?

Adrian: Yes, I once worked on an incident response playbook (runbook) that had made several outages last longer than they needed to. The playbook was very detailed, with specific steps for different scenarios, checklists, and even escalation paths. In theory, it was very comprehensive. But in practice, it actually became a constraint. During an outage, our team rigidly followed the playbook, even when it became clear that we were dealing with an unfamiliar situation. That prevented creative thinking, which is very important to adaptive response. We were spending more time documenting compliance with the playbook than actually investigating the issue.

After the incident, we rethought our approach by simplifying the playbook to focus on principles rather than strict, specific steps, asking questions, and explicitly marking certain sections as “guidelines, not rules”. We also added some decision points where teams were asked to assess whether the playbook was working, with “exit ramps” where responders could acknowledge deviation from standard procedures. We also changed the training to emphasize information gathering, questioning, and collaboration rather than following a strict procedure.

The big lesson for the team was that resilience comes from adaptability, not rigid adherence to predetermined processes. Good playbooks should enhance human judgment, not replace it.

Real incidents are like playing jazz; they require improvisation.

Q: When you imagine your legacy, what’s one idea you hope people carry forward 10 years from now?

Adrian: It is ok to be vulnerable.

Q: What’s a resilience lesson you learned from failure that no amount of chaos engineering could’ve taught?

Adrian: I failed many times scaling chaos engineering practices across organizations because of the prevention paradox. That is the idea that the more successful we are at preventing failures, the less visible and valued that prevention work becomes. This paradox creates a perpetual challenge: when your chaos engineering investments work perfectly, the disasters they prevent never materialize, making it appear as though those investments weren’t necessary in the first place.

Organizations then question the resources allocated to prevention, potentially reducing them until the next major incident occurs. I’ve seen this cycle repeat countless times. Teams that successfully prevent outages for extended periods often face budget cuts or shifting priorities away from resilience work, precisely because their success creates the illusion that the threats weren’t real.

Fighting against that paradox is hard. Really hard. But organizations can develop ways to reduce its impact by documenting and simulating the “alternate reality” where preventive measures weren’t taken. They can tell compelling stories about “near misses” that were successfully averted. They can present prevention costs in the context of what they’re preventing, making the value more tangible. They can build institutional memory about why preventive measures exist, even as teams change. And finally, they can recognize and reward the invisible work of prevention as much as the visible work of innovation.

Q: If you had to design resilience from the ground up with a new company starting today, what would it look like?

Adrian: From day one, I would start by writing the core tenets for a resilience-focused organization. Writing tenets is something I learned while at AWS. You do that at the start of every project, and it forces you to think carefully about the long-term impact of your decisions. These tenets serve as guiding principles, not rigid rules, helping the team maintain its resilience mindset as it evolves. They would be living principles, reviewed and refined as the team learns and the organization evolves. They would apply equally to how we build systems, how we work together as a team, and how we interact with the broader organization. By doing that from the beginning, we’d create a foundation for resilience that could grow with the organization, rather than having to retrofit resilience later after painful failures.

Here is how I probably would start:

- Embrace failure as a learning opportunity
  - We treat every failure as valuable data, not as something to hide or blame
  - We celebrate learning from mistakes as much as we celebrate successes

- Design for the real world, not the ideal one
  - We assume components will fail and plan accordingly
  - We consider all failure modes
  - We run regular “failure injection” exercises to build experience and confidence
  - We balance resilience with business efficiency needs for each system

- Recovery over prevention
  - We optimize for fast recovery
  - We practice recovery regularly through GameDays and chaos exercises
  - The recovery path is part of the happy path. Active-active is preferred to active-passive systems

- Observability drives understanding
  - We invest in observability tools that allow arbitrary questions, not just dashboards
  - We measure what matters to users, not just what’s easy to measure
  - We ensure business metrics are tied directly to technical metrics

- Simplicity creates resilience
  - We value understandable systems over clever ones
  - We consciously limit complexity in both code and organizational processes
  - We balance resilience measures against added complexity

- Maintain deliberate slack
  - We resist the temptation to optimize for high utilization
  - We preserve capacity for handling unexpected events
  - We allocate time for learning, improvement, and adaptation

- Share operational knowledge
  - No one person should be a single point of failure for knowledge
  - We document not just what we do, but why we do it
  - We rotate responsibilities to build broad operational experience

- Collaborate across boundaries
  - We dissolve the boundary between development and operations
  - We include business stakeholders in resilience decisions
  - We consider the full socio-technical system in our approach

- Continuously adapt
  - We regularly reassess and question our decisions and processes
  - We evolve our practices based on new learnings
  - We treat resilience as dynamic, not a static achievement

- Value business impact
  - We align resilience efforts with business priorities
  - We express resilience in terms of business outcomes, not just technical metrics
  - We make trade-off decisions transparent to all stakeholders